Technical Update #12: Building an article database for Clearpill and BSA for Fine Tuning pipeline using CNN etc.

Advanced Data Processing: Building a Robust Article Database for Fine-Tuning Pipelines

As we continue our mission of advancing AI capabilities through innovative data management and processing techniques, we present our latest technical update.

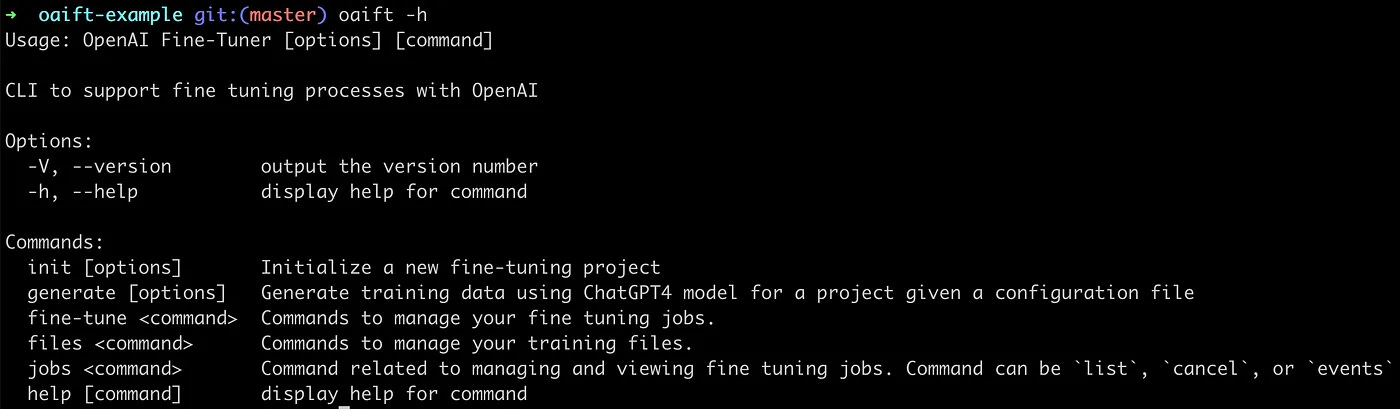

This week we'll explore how we're leveraging HuggingFace, Supabase, and Kedro to build a comprehensive article database for Clearpill and BSA, enhancing our fine-tuning pipeline for CNN models.

What are HuggingFace, Supabase, and Kedro, and how are we utilizing them?

HuggingFace is a leading platform for natural language processing models and datasets. By integrating HuggingFace into our workflow, we're accessing state-of-the-art models and techniques for processing and analyzing our article data.

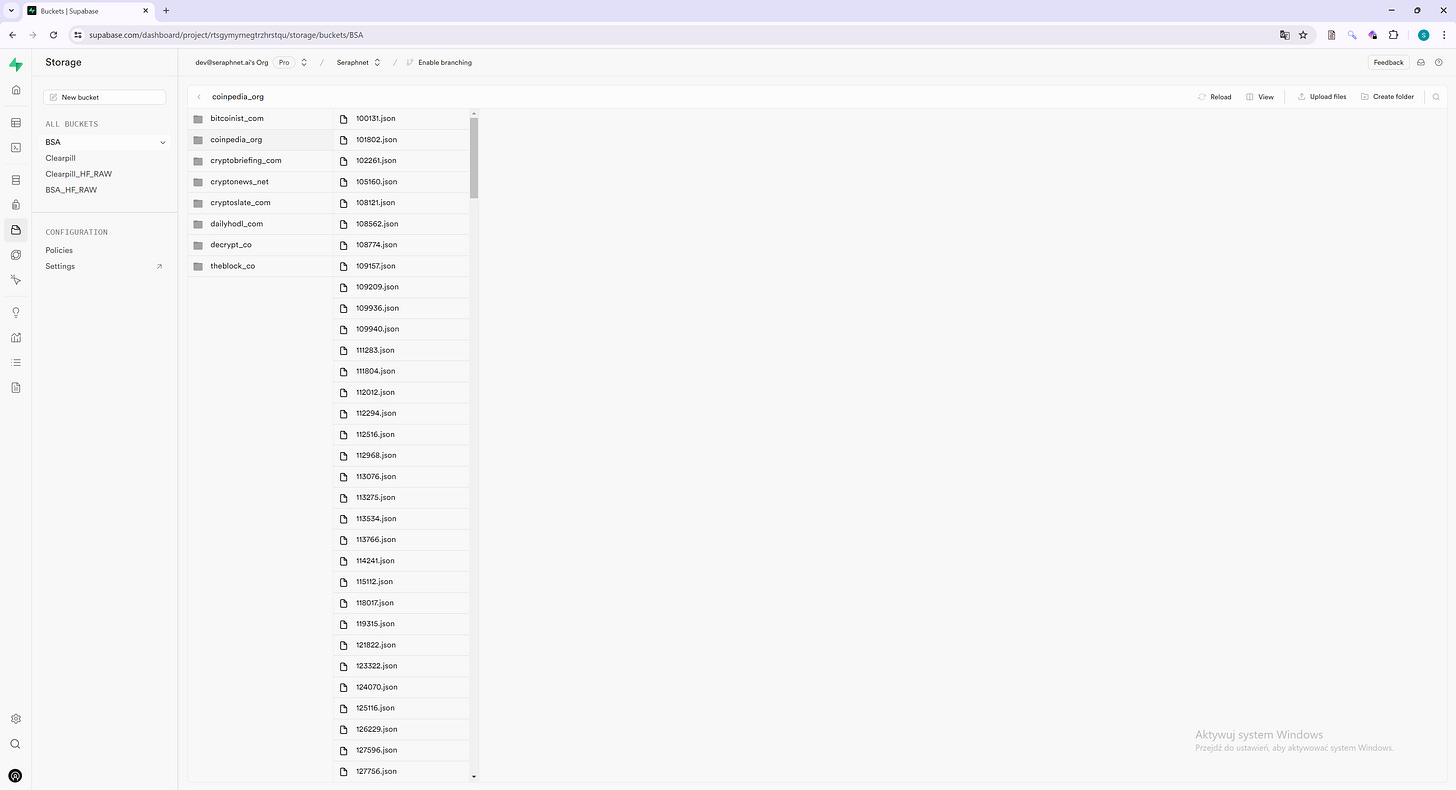

Supabase, an open-source Firebase alternative, serves as our robust and scalable database solution. It provides us with the necessary tools to efficiently store, manage, and retrieve our processed article data.

Kedro, a production-ready data science framework, is being utilized to create modular, maintainable, and reproducible data pipelines. Its PySpark integration allows us to process large volumes of data efficiently.

Our article database building process offers several key benefits:

Data Cleaning and Preprocessing

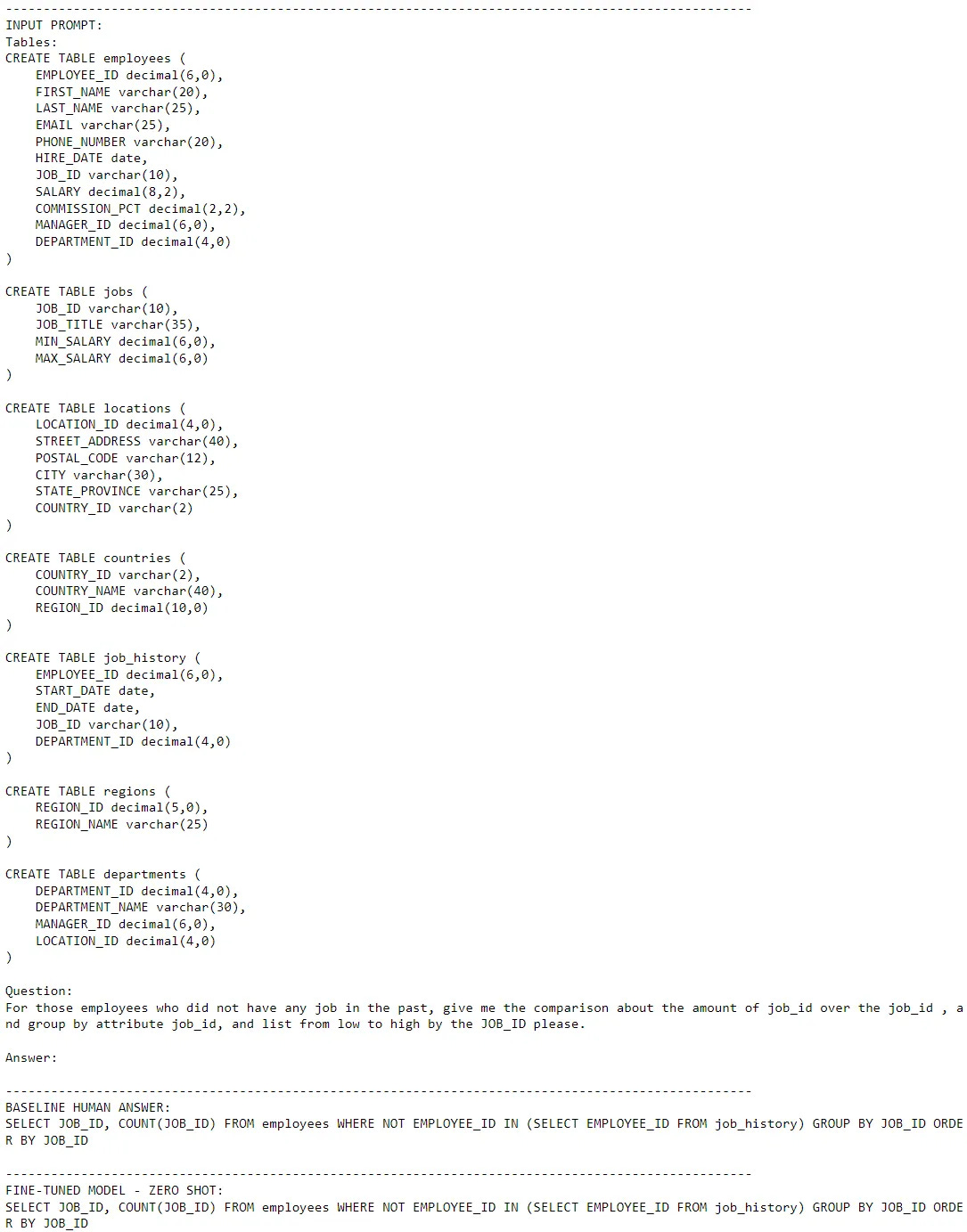

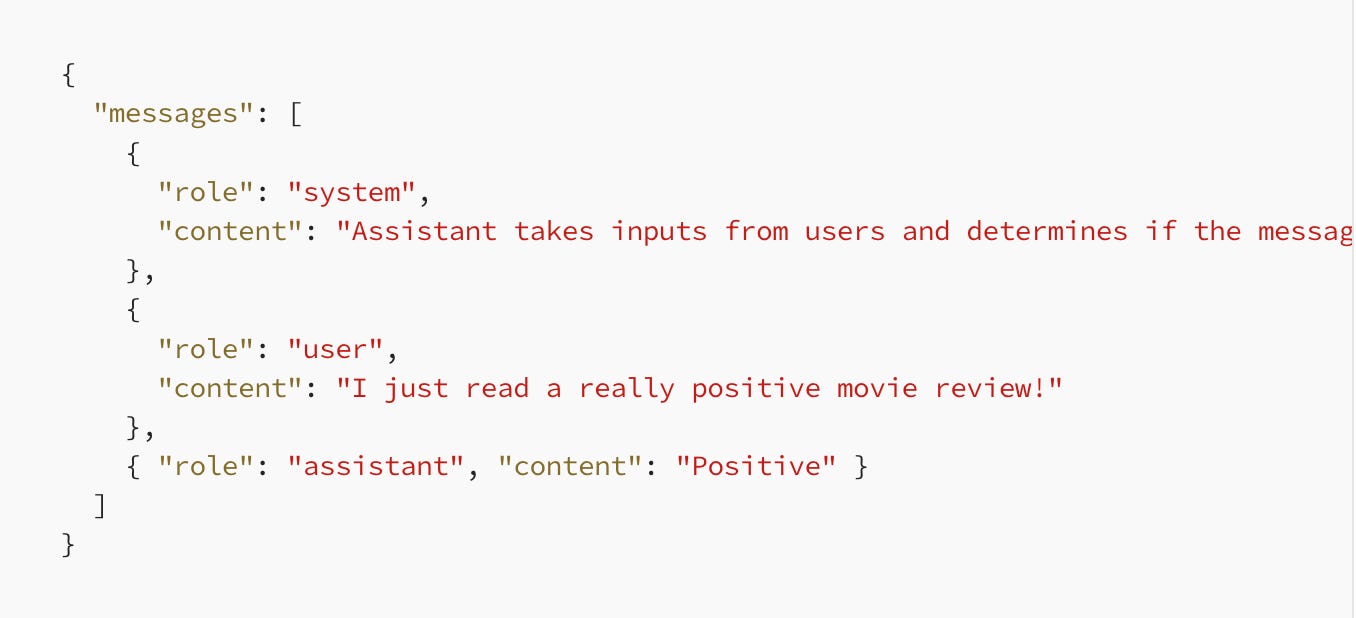

Building a high-quality dataset for fine-tuning requires meticulous data preparation. Our process begins with duplicate removal to ensure a clean, unique dataset. We handle missing data through imputation or record removal, and perform noise reduction to filter out irrelevant content like advertisements. To enhance model training diversity, we implement synonym replacement and back translation techniques. This creates variations of the same text, providing our models with a richer training dataset.Efficient Database Schema and Management

We've designed an efficient and scalable database schema using Supabase, including tables for articles, metadata, tags, and processed data. Our schema is normalized to reduce redundancy and ensure data integrity, with implemented indexing strategies to optimize query performance. We've developed scripts for batch processing and ingesting large volumes of articles, as well as mechanisms for real-time ingestion of new content. Version control of the dataset is maintained, allowing us to track changes and updates over time.

Data Security and Accessibility

Data security is a top priority. We've implemented regular backup procedures and recovery plans to prevent data loss. Our database is secured through encryption, strict access controls, and adherence to data protection regulations. To facilitate seamless integration between the database and our machine learning pipeline, we've developed a robust data access layer (API). This allows for automated data retrieval processes, feeding our CNN models with the latest articles from the database.

Enhancing Model Performance through Advanced Data Processing

At Seraphnet, we're committed to pushing the boundaries of AI model performance. Our advanced data processing techniques play a crucial role in this endeavor.

Data Augmentation

Our synonym replacement and back translation techniques not only increase the diversity of our training data but also help our models become more robust to variations in language use. This is particularly beneficial for fine-tuning language models to understand and generate human-like text across various domains.Efficient Data Splitting

We've implemented a strategic approach to data splitting. Our dataset is divided into training and testing subsets to evaluate model performance accurately. Additionally, we create a validation set from the training data, which is crucial for tuning model hyperparameters and preventing overfitting.Continuous Monitoring and Optimization

We've set up monitoring tools to track database performance and identify potential issues proactively. Regular maintenance tasks, such as indexing and cleanup, are scheduled to ensure optimal performance of our database and, by extension, our fine-tuning pipeline.

Embracing HuggingFace, Supabase, and Kedro to Revolutionize Fine-Tuning Pipelines

The integration of HuggingFace, Supabase, and Kedro into our article database building process represents a significant advancement in Seraphnet's journey to revolutionize AI model fine-tuning. By leveraging these powerful tools, we're not only enhancing our own capabilities but also contributing to the broader advancement of efficient and effective AI model training.

These technologies allow us to focus on what we do best: developing cutting-edge AI applications that push the boundaries of what's possible. From enhancing our CNN models for unbiased outputs to exploring new frontiers in natural language processing, we're committed to driving innovation and shaping the future of AI technology.

Stay informed about our ongoing technical advancements. Together, we're building a future where AI is not only powerful but also adaptable, efficient, and capable of understanding and generating human-like text across diverse domains.

Additional information regarding our technical advancements can be accessed by subscribing to our Substack newsletter.